The Rabbit R1: AI in a Box

The Rabbit R1 is another AI gadget in a box, part of the current wave of dedicated AI devices. It shares some similarities with the Humane AI Pin, both good and bad, but a few features are supposed to set it apart. At least, that’s the idea. Let’s take a look.

AI in a Box

Sound familiar? This time, though, it’s not a wearable. Instead, it’s a portable AI assistant that you carry in your pocket, much like a smartphone. It’s about the size of a small stack of Post-it notes, with a lightweight, square plastic body designed by Teenage Engineering, a brand that’s been getting a lot of attention lately.

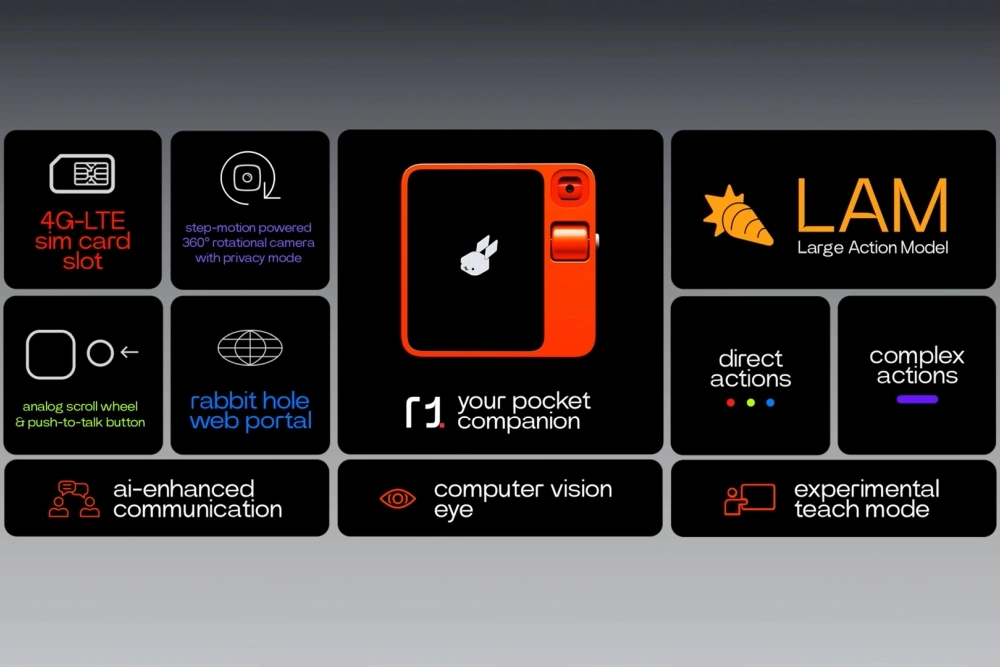

You can spot the Rabbit R1 from a mile away. It has a single button on the right side, which you press and hold to ask it questions. Unlike the Humane AI Pin, which uses a projector, the Rabbit R1 has a built-in screen. It doesn’t come with a cellular plan, but there’s a SIM card slot next to the USB-C charging port so you can add your own.

It seems to send almost every request to the cloud, but responses come back noticeably faster than on the Humane AI Pin. That’s not a high bar to clear, but it’s consistently faster.

Ask Questions, Get Answers

Want to know something? Just ask.

How far away is the moon?

R1: The average distance between Earth and the Moon is about 384,400 kilometers.

You can connect headphones via Bluetooth or turn up the built-in speaker (I did both). The screen shows your answer as text, along with the time and battery status. You’ll also notice the bouncing rabbit icon on the screen, which tells you the device is awake. To put it to sleep, press the button once, or it’ll do so automatically after a few seconds. Press the button again to wake it up, and the rabbit will be right there, waiting for you.

There’s no on-screen button or gesture for opening the settings. Instead, you shake the device like an Etch A Sketch. From there, you scroll up and down with the scroll wheel and select options with the button. That’s how you move around the whole interface.

The R1 also has two unique pieces of hardware: the scroll wheel and a swiveling camera. The scroll wheel is how you navigate the UI, which might seem strange, but I’ll explain why in a bit. The camera is what makes the assistant multimodal, meaning it can use vision to answer questions about what it sees. You double-tap the button to open the camera, then press and hold to ask a question.

For example, I pointed it at my computer screen with a long email, asked for a summary, and it quickly provided one. It works the same way with articles. I think that’s pretty cool. But at the end of the day, it’s just an AI in a box, best at answering questions.

The Downsides

That said, this device has plenty of issues. The battery life is just as bad as the Humane AI Pin’s. It has a tiny 1,000mAh battery that drains way too quickly. Carrying an extra device besides your phone is annoying enough, but this one dies in about four hours even when you’re not using it. You end up charging it multiple times a day, and if you leave it off the charger overnight, it’s dead when you wake up. It’s exhausting.

It also takes 45 minutes to charge from 0% to 100%. Plus, it’s missing a lot of basic features. It can’t set alarms or timers, take photos or videos, send emails, or manage a calendar. There are so many simple things I’d want from an assistant that it just can’t do.

And, of course, like many AI assistants, it still gets things wrong. It confidently gives incorrect answers, even to questions where I already know the right one. That’s one of the common downsides of this technology.

This device was designed by Teenage Engineering, and it really shows. The bright orange, quirky design is intentional and gives it a friendly vibe. They’re clearly big fans of analog controls. While the scroll wheel fits with that theme, it’s actually frustrating to use.

First off, the wheel sticks out a little from the back, which actually looks kind of cool. The downside is that if it were very sensitive, it might scroll just from being set down on a table. To prevent that, they’ve toned down the wheel’s sensitivity, so it moves really slowly. It takes quite a bit of scrolling just to move down one line in the settings. There’s also no haptic feedback while scrolling, so it’s hard to tell how far you’ve gone.

It’s not the end of the world, though; you can get used to it. You use the button to select things. But here’s the problem: there’s no back button. To go up a level, you have to scroll all the way to the top of the list every single time. That gets old pretty quickly.

And then, adjusting things like brightness or volume requires two hands. You have to select the setting with one hand, then hold the button while you scroll with the other hand. It works, but it’s definitely not a one-handed operation. You can learn to live with it, and it’s just one of those quirky features, I guess. But honestly, a lot of these issues would be solved if it had a touchscreen.

Now, what if I told you this does have a touchscreen, but you can barely use it for anything? It would be so much easier to just tap on a menu option or swipe back to the top of a list, but nope—none of that. The only thing you can use the touchscreen for is typing in terminal mode. When that’s enabled, you can rotate the device sideways, and a keyboard pops up for typing. You can even scroll through letters with the wheel, which is kind of neat. But why not let the touchscreen do more? Maybe they just didn’t want it to look too much like a smartphone. Who knows?

So what’s the point of all this? If it’s this similar to the Humane AI Pin, which wasn’t great either, why bother? Well, there are two main things Rabbit is hoping will make the R1 stand out: the price and the large action model.

The Humane AI Pin got a lot of flak because it cost as much as a phone: $700, plus a $24/month subscription to keep it from becoming useless. It was just way too much. The R1, though, costs $200 with no subscription. That’s a huge gap, and honestly, it could be the deciding factor for some people.

That said, the $200 price tag doesn’t cover everything. To use the R1 over cellular data, you’ll need to buy a separate SIM card. While Rabbit doesn’t charge a subscription for the device itself, you’ll still pay a carrier a monthly fee for data when you’re away from Wi-Fi.

The unboxing experience is pretty minimal. It comes in a plain cardboard box with a plastic case that looks like a cassette tape, which can also serve as a stand. That’s it—no charging brick, no USB-C cable, no stickers, no instruction manual—nothing extra at all.

As for the R1 itself, it’s made of plastic. That doesn’t mean it’s flimsy, though—there’s no creaking or flexing. It’s just, well, plastic. The camera is basic, the speaker sounds cheap, and it runs on a low-end MediaTek chip—the same one used in the Moto G8 Power Lite, which is a budget phone. The battery life isn’t great, there’s no fast charging or wireless charging, and the only color option is a bright orange that’s so saturated it’s hard to capture properly in photos. Seriously, it’s the most neon orange you’ve ever seen in your life.

Like the Humane AI Pin, the R1 has no apps of its own. What’s supposed to set it apart is something Rabbit calls a “large action model.”

In simple terms, a large action model takes your words and turns them into actions, using apps on your behalf the way a person would, based on what you tell it to do. That’s different from an API, where a service has to expose an official interface and the integration can only do what that interface allows. The idea is to offer more flexibility and freedom, without those restrictions.

The idea behind this is pretty cool: it’s like having software that operates an app just like a human would, clicking and typing the way we do with a mouse and keyboard. You can think of it as a virtual agent. Honestly, I think it’s a really awesome concept. Large language models have been trained on tons of data to respond like humans, and some are really convincing. So, in theory, a large action model could use apps and services just like we do, whether it’s Spotify, Twitter, banking apps, or anything else.

It’s already pretty good at recognizing key elements in the interface, like play buttons or buy buttons. With enough training, it could get even better. The only problem is that it doesn’t have that much training data yet. Right now, they’ve only made four apps available for testing: Spotify, Uber, DoorDash, and Midjourney.

There’s an online portal called the Rabbit Hole where you log in to connect your accounts for these services. From there, the R1 can interact with them the way you’d expect, like playing a song on Spotify. You can ask it to play a specific song, and it’ll try to find it, showing details on the screen so you can confirm or adjust what it’s doing. The concept works, but in practice, it’s a little rough. I’ve had it play the wrong song a few times, and I’ve seen others struggle with DoorDash too, which is pretty frustrating. I can’t imagine how annoying it would be if it messed up an Uber ride. It clearly needs more training, and that’s just with the four apps they’ve started with.

Rabbit says the model has been trained on 800 apps, but since it hasn’t built the interfaces for them yet, they aren’t available on the platform. The company is also working on something called a generative UI, which would let the system recognize what kind of app it’s dealing with and create the right interface for it automatically. But that’s still in development, so it’s not available yet either.

If there’s another app or service you’d like your Rabbit to handle, maybe something for work or a super niche project you’ve come up with, Rabbit has mentioned something called “Teach Mode.” The idea is that the R1 watches you perform a task with your mouse and keyboard, learns from it, and can then repeat those actions later. Sounds pretty cool, right? Unfortunately, Teach Mode is still in development and won’t be ready until later this year.

So right now, the device only has the four basic apps we talked about earlier, and none of the promised features. So, what are we really doing here?

What Are We Doing Here?

I’m trying not to turn this into a rant, but it’s hard not to notice that tech companies are often releasing products that aren’t fully ready. It feels like they’re making stuff, rushing to put it out there, then promising to fix it later with updates. It used to be that you would build a product, then sell it. Now, it’s almost like they sell it first, release a half-finished version, and hope they can patch it up later. And that leads to a messy middle phase.

We’ve seen this trend in a lot of industries. It’s happening with video games—big studios release broken games and call them “alpha” versions, promising updates to fix the bugs, but they still charge full price. Cars are going the same way, where manufacturers release models without all the promised features, only for them to arrive later with software updates. Smartphones have been doing this for years, where you get a new phone and one or more features are missing at launch but will arrive in an update months later.

Now, with AI-based products, we’re seeing this trend hit its peak. The products we’re getting right now are far from the fully functional versions they promised. And the worst part? We’re paying full price for something that’s barely usable. That’s frustrating for buyers, but it’s also tough for reviewers. How do you review something that’s not even close to the finished product? Do you just hope that it’ll get better over time? It’s a messy situation.

What are we really doing here? On one hand, it’s amazing that tech products can get better over time. The stuff you buy today can be even better tomorrow, and that’s a huge shift from how things used to be. It’s definitely something to appreciate. But on the other hand, that means we’re often getting products that aren’t fully finished, and I have a feeling it’s only going to get worse before it gets better.

Looking Ahead

I’m personally really excited about the idea of a super personalized AI assistant that can handle everything a human assistant could. That’s the dream for me, and I’m hoping it becomes a reality. I’m also intrigued by how different companies are approaching it in their own ways. We’ll probably get there eventually, but let’s face it—it’s going to take a lot of time, effort, and technical work, plus a ton of data. I’ve said this before, but a truly good assistant needs to know everything about you—your preferences, where you are, what you’re doing, what you like. It’s a lot of data to manage.

When it comes to the Rabbit, I think the low price point is intentional. These types of products are tough to sell because you’re basically betting on what it could become in the future. Take the Pin, for example. You’re paying $700, plus $24 a month, just to take a chance that it’ll eventually live up to its promise. That’s a pretty big gamble. But with the Rabbit, at $200, it’s a much easier risk to take. It feels like a smaller investment with less to lose. And if it turns out to be amazing in a couple of years, it’ll feel worth it. Maybe some of those 800 apps will actually be really useful, or maybe the Teach Mode will do something with a single button press that you never thought possible. If that happens, it could be a game changer.

This reminds me of when Tesla started shipping cars with Autopilot. They were selling great electric cars with good range and a solid charging network, but Autopilot itself was more of a beta test. As more people used it, Tesla collected data from millions of miles on the road, which helped improve the software over time. That data gave them a head start in developing better driver-assistance systems.

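I think Rabbit is hoping to do the same thing: get as many units out there as possible so that real-world use can help train and improve the system. The problem is that the R1 doesn’t have the same immediate appeal. People bought Teslas because the cars were good on their own, but the R1 isn’t very useful until the model improves, and the model can’t improve much without lots of people using it. It’s a bit of a chicken-and-egg problem.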

So, my advice? Buy the product for what it is right now, not what it might be in the future. That’s honestly the hardest thing to do, especially with something that promises so much. But that’s the advice I’d give. And we’ll also have to see what big companies like Google and Apple are planning to do in this space, probably later this year.

Time will tell.
